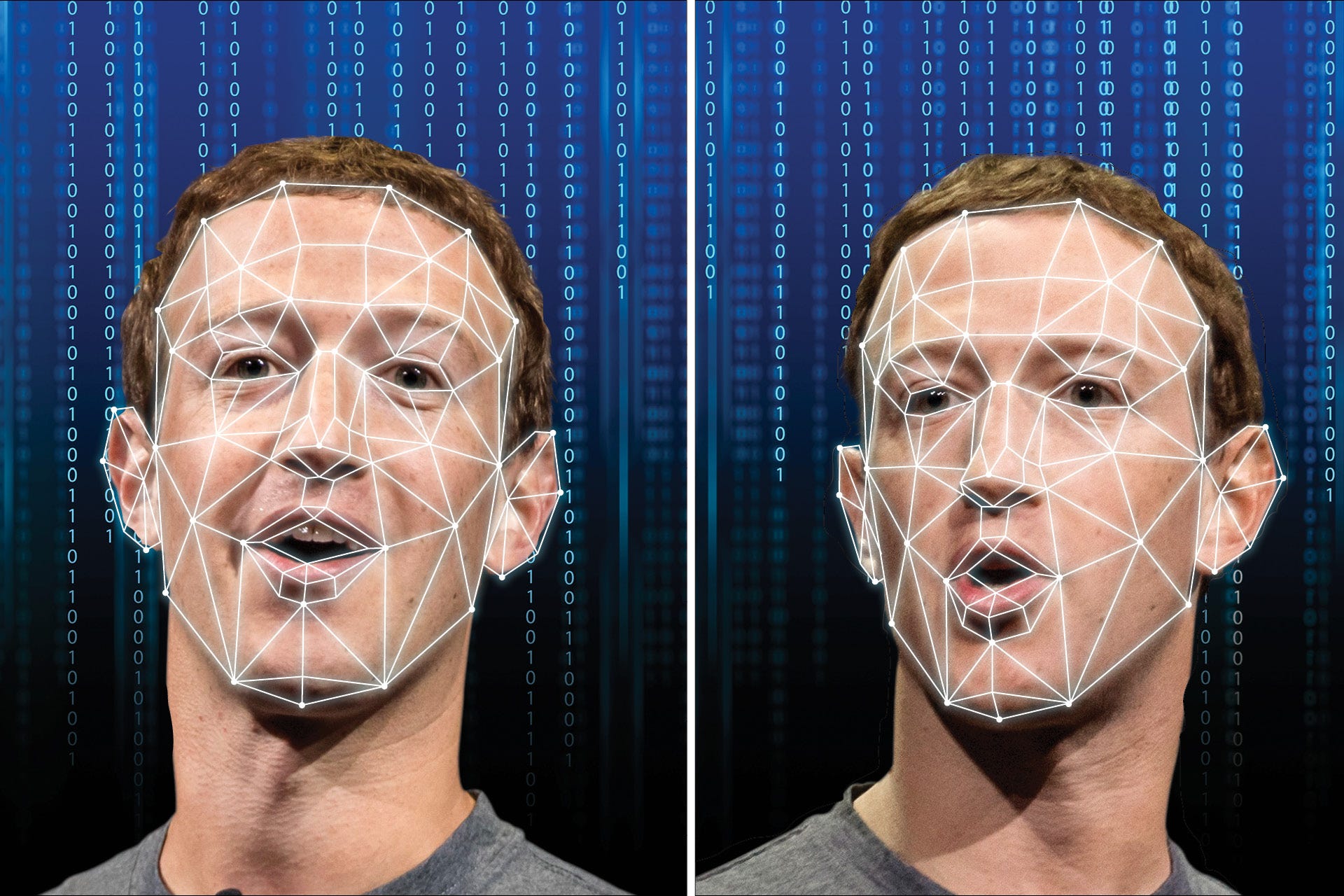

Deepfake technology uses artificial intelligence (AI) to create realistic-looking videos and audio recordings of people saying or doing things they never said or did. A model is first trained on a large amount of data, such as photos, videos, and voice recordings of a person, to learn their appearance and voice. It then generates new images and sounds that match that person, but with different expressions, movements, or words. One widely used technique is the generative adversarial network (GAN), in which a generator produces fakes while a discriminator tries to tell them apart from real samples, so each improves against the other. Many face-swapping tools rely on a related technique, the autoencoder, instead.
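To make the adversarial idea behind GANs a little more concrete, here is a minimal sketch of the two competing objectives. It does not train anything or generate images. It only assumes that a discriminator outputs a probability that a sample is real, and it uses the commonly taught cross-entropy form of the losses. The function names and the example probabilities are illustrative, not taken from any particular deepfake tool.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Cross-entropy loss for the discriminator.

    d_real: probability the discriminator assigns to a real sample being real.
    d_fake: probability it assigns to a generated sample being real.
    The loss is low when d_real is near 1 and d_fake is near 0.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -math.log(d_fake)


# As the fake becomes more convincing (d_fake rises), the generator's loss
# falls while the discriminator's loss rises: the two goals are opposed.
for d_fake in (0.1, 0.5, 0.9):
    print(
        f"D(fake)={d_fake:.1f}  "
        f"generator loss={generator_loss(d_fake):.3f}  "
        f"discriminator loss={discriminator_loss(0.9, d_fake):.3f}"
    )
```

In a real system both models are neural networks, and training alternates between lowering each loss in turn, which is what gradually pushes the generated output toward realism.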

The term dates back to 2017, when a Reddit user going by the name "deepfakes" posted doctored pornographic videos of celebrities on the site. Since then, the technology has become more accessible and advanced, thanks to the availability of open-source software, online tutorials, and cloud computing services. Almost anyone with a reasonably capable computer, or even just a smartphone, can now make a deepfake, using desktop tools like DeepFaceLab and FaceSwap or mobile apps like Zao.

Deepfake technology has many potential applications, both positive and negative. On the positive side, it can be used for entertainment, education, art, and social justice. For example, deepfakes can help actors play different roles, teachers create engaging lessons, artists express their creativity, and activists raise awareness about important issues. On the negative side, it can be used for fraud, blackmail, harassment, and propaganda. For example, deepfakes can help scammers impersonate someone’s voice, blackmailers extort money with fabricated footage, harassers create and spread fake intimate videos, and propagandists spread fake news.

The main challenge of deepfake technology is that it can undermine the trust and credibility of information, especially in the digital age. It can make it harder to distinguish between what is real and what is fake, and to hold people accountable for their actions. It can also violate the privacy and consent of the people whose images and voices are used without their permission. Therefore, it is important to develop ways to detect, regulate, and counter deepfake technology, and to educate the public about its risks and implications.

Some possible ways to address these risks are:

- Developing more effective techniques to detect deepfakes, such as AI-based classifiers, and to verify that media is authentic, such as digital watermarking or blockchain-based records of where a file came from.

- Creating laws and regulations to ban or limit the use of deepfakes for malicious purposes, such as defamation, identity theft, or election interference.

- Raising awareness and literacy about deepfake technology, such as teaching people how to spot deepfakes, verify sources, and report suspicious content.
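As a rough illustration of the "verify sources" idea in the list above, the sketch below shows how a publisher could attach a cryptographic tag to a media file so that later copies can be checked for tampering. This is an assumption-laden toy, not a deepfake detector and not true watermarking: it only shows whether a file's bytes match what was originally published. The key, the byte strings, and the function names are all hypothetical, and real provenance systems typically use public-key signatures rather than a shared secret.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex tag that binds the media bytes to the publisher's key."""
    return hmac.new(secret_key, media_bytes, hashlib.sha256).hexdigest()


def is_unaltered(media_bytes: bytes, secret_key: bytes, published_tag: str) -> bool:
    """Check whether the media still matches the tag published with it."""
    expected = sign_media(media_bytes, secret_key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, published_tag)


# Hypothetical example data: stand-ins for a real key and a real video file.
key = b"publisher-demo-key"
original = b"raw bytes of the original video"
tag = sign_media(original, key)

tampered = original + b" with an edited frame"

print(is_unaltered(original, key, tag))
print(is_unaltered(tampered, key, tag))
```

A check like this cannot say whether a video is a deepfake. It can only confirm that a file is the same one a trusted source released, which is why it complements detection rather than replacing it.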

Deepfake technology is a powerful and controversial innovation that can have both positive and negative impacts on society. It is up to us to use it responsibly and ethically, and to protect ourselves and others from its potential harms. Thank you for reading!